前言

Oracle 支持强大的闪回,使用场景:

- flashback database:数据库闪回;多用于数据库恢复,数据库、用户、表空间误删。

- flashback table:表闪回;用于数据表恢复;数据表误删。

- flashback query:闪回查询;应用于修复误操作数据。

闪回可以增加管理人员和使用人员的容错率。Long long ago,PostgreSQL 也是支持闪回的 👉🏻 Joseph M. Hellerstein, Looking Back at Postgres. https://arxiv.org/pdf/1901.01973.pdf

Intriguingly, such functionality was declared as one of the objectives of Postgres and was implemented at the very start, but it was removed from the database system when the project support was passed on to the community.

有趣的是,这种功能被宣布为 Postgres 的目标之一,并在一开始就实现了,但是当项目支持移交给社区时,它就从数据库系统中删除了。

因此,目前原生 PostgreSQL 仍不支持闪回,但是也有各种曲线救国的方式,且听我一一道来。

pg_dirtyread

第一个耳熟能详的方式当然是 pg_dirtyread 了,基于 MVCC 的实现原理,数据被删除之后依旧留存在数据页中,直至被 vacuum 清理,因此使用该扩展的前提是需要的数据还没有被清理

- vacuum_defer_cleanup_age,延迟多少个事务再进行清理,但是该参数高版本已被移除,且有 BUG

- 关闭表级 autovacuum_enabled,toast.autovacuum_enabled

1 | CREATE EXTENSION pg_dirtyread; |

所以如果要使用 pg_dirtyread 的话,发现了误删,第一时间关闭表级的 vacuum。

pg_recovery 插件在 pg_dirtyread 的基础上进行了增强,默认只有待找回数据,而 pg_dirtyread 会全部返回,如果大量无效数据,性能也会受到影响。

但是此类插件都无法回滚 DDL,如果是 DROP TABLE 的话,就歇菜了,不过我们也有很多 workaround,比如 pgtrashcan,回收站,像 windows 中的回收站,删除了文件后,是放在回收站的,可以选择还原,找回相关文件。同样,当我们删除表后,表并不会立即物理删除,而是先保存到回收站中,在需要时可以恢复表。当 DROP TABLE 命令执行后,表会被移到一个名为 “Trash” 的模式下,如果想永久删除此表,可以删除 “Trash” 模式下的这张表或者删除整个 “Trash” 模式,这个 pgtrashcan 仅对表有效,其它数据库对像被删除后不会转移到 “Trash” 模式。且该插件

目前对于依赖项识别不清,功能过于简单,难以完成复杂操作的闪回,比如存在依赖项。

甚者,你可以通过事件触发器来做各类限制,比如禁止删表、删列等等。

1 | CREATE OR REPLACE FUNCTION confirm_drop () |

延迟备库

延迟备库论原理来说其实不算是闪回,但是有时或许也能救你一命,比如 drop table,pg_dirtyread 无法实现 DDL 的找回 (基于 MVCC 的原理基本都只能找回 DML 数据),不过 drop column 可以找回。

顾名思义,延迟备库就是设置备库延迟 replay WAL 的时间 (recovery_min_apply_delay 参数控制),而备库依然及时接收主库发送的日志流,只是不是一接收到后就立即应用,而是等待此参数设置的值再进行应用。所以如果你在主机执行了一个没有条件筛选的 DELETE 操作,幡然醒悟。这是备库还没有回放,可以赶紧采取紧急措施补救数据。

但是延迟备库有一个很大的坑,目前 WAL record 只有在提交和回滚的时候包含时间戳,getRecordTimestamp 用于提取 record 中的时间戳,然后记录

Currently, only transaction commit/abort records and restore points contain timestamps.

1 | /* |

1 | postgres=# select pg_switch_wal(); |

那么会有什么危害呢?关于流复制的原理我之前也写过,👉🏻 从一个群友问题看流复制实现原理,是否发送 WAL 与主库的事务是否提交与否没有关系,但主库的事务能否提交却取决于备库的 WAL 写到了哪里 (默认是 on 的话,就需要落盘),即同步级别,备库会实时回放,备库按部就班,原模原样复刻主库的操作。主库回滚我就回滚,主库删除我就删除,所以延迟备库的危害就不难理解了:

比如某个事务包含了 DDL 操作,那么这笔操作马上会在 HOT STANDBY 执行,锁在 HOT STANDBY 也同时被加载。

但是当遇到这个事务的 commit record 时,由于设置了 recovery_min_apply_delay,这笔 record 被延迟执行,这个锁也会延迟到这笔 record 被 apply 为止。在此延迟时间段内,对这个被执行 DDL 的表的 QUERY 都会被堵塞。

让我们看个例子,主库加一列:

1 | postgres=# alter table test_delay add column info text; |

备库查询会被阻塞

1 | postgres=# select pg_backend_pid(); |

锁等待信息也很明显,被 startup 回放进程给阻塞了。不过目前这种危害只针对 commit,rollback 是不会有影响的。

1 | /* |

时间旅行

即常说的 temporal table——时态表,此类数据库随着时间的推移跟踪数据库内容的历史记录,自动保留所述历史记录并允许对其进行更改和查询。https://wiki.postgresql.org/wiki/Temporal_Extensions,以 temporal_tables 插件为例,通过它你可以快速回溯到任何时间点的数据状态,该插件还可以解决事务冲突,以及为系统版本控制表设置系统时间,比如 SELECT set_system_time(‘1985-08-08 06:42:00+08’);,不过目前仅支持系统时间 (事务时间)。

A temporal table is a table that records the period of time when a row is valid. There are two types of periods: the application period (also known as valid-time or business-time) and the system period (also known as transaction-time).

Temporal Tables 适用于多个场景,包括但不限于:

- 审计与合规:企业需要跟踪并证明数据何时何地被更改,以满足法规要求。

- 错误修复:如果由于错误导致数据不一致,可以轻松回滚到错误发生之前的状态。

- 数据洞察:分析历史趋势,了解业务发展的动态过程。

- 版本控制:软件开发中,数据库结构也需要版本控制,Temporal Tables 为此提供了便利。

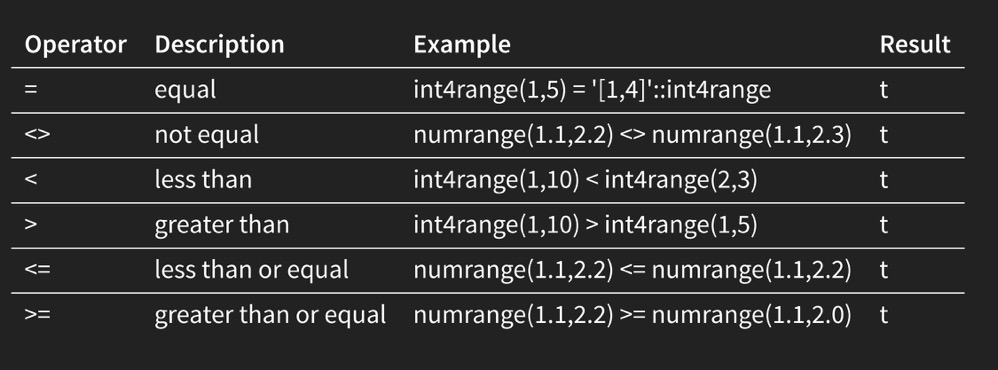

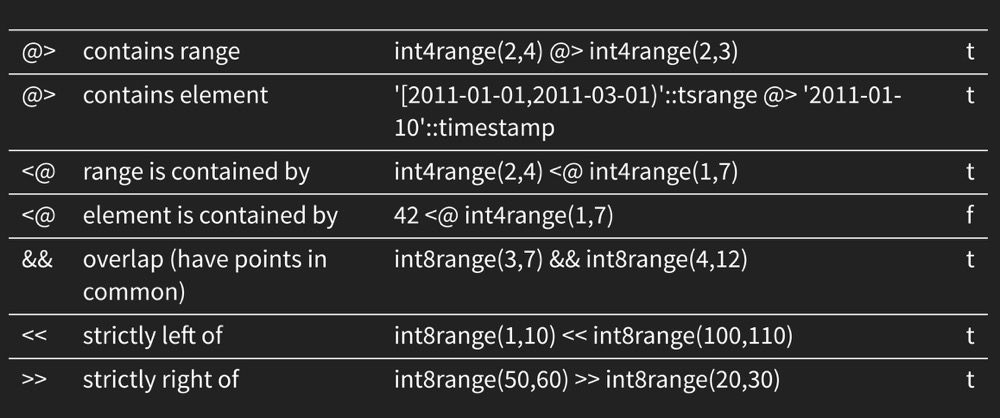

借此,我们也可以基于其实现闪回查询。得益于原生 PostgreSQL 对于范围类型的支持

以官网为例,其原理实际上是基于触发器来做的

1 | postgres=# \d employees |

假如误删了数据,根据起始时间和结束时间找到指定时间段的数据即可。

1 | postgres=# SELECT name,department,salary,lower(sys_period) AS start_time, upper(sys_period) AS end_time from employees_history ; |

还有个类似插件是 https://github.com/ifad/chronomodel,不过要略显复杂。其还依赖于 btree_gist 插件,btree_gist 对于范围查询的性能很挫,更新开销也要更大。

ChronoModel implements what Oracle sells as “Flashback Queries”, with standard SQL on free PostgreSQL. Academically speaking, ChronoModel implements a Type-2 Slowly-Changing Dimension with history tables.

All history keeping happens inside the database system, freeing application code from having to deal with it. ChronoModel implements all the required features in Ruby on Rails’ ORM to leverage the database temporal structure beneath.

https://github.com/nearform/temporal_tables 这个插件则是Rewrite of Temporal Tables Extension as a trigger,将 temporal_tables 进行了改写。

walminer

walminer 想必使用过 PostgreSQL 的都知道,通过解析 WAL,反向生成 UNDO SQL,类似于 binlog2sql 但是其使用有门槛,4.0 也需要 license 了 (不贵,就一杯 coffee 的事情,多多支持作者,开源作者不易)。4.0 进行了极大增强,更多细节请参考:https://gitee.com/movead/XLogMiner

walminer是PostgreSQL的wal日志解析工具,4.0版本摒弃插件模式改为bin模式,现已脱离对目标数据库的编译依赖和安装依赖,一个walminer工具可以解析PG10~PG15的WAL日志。 现已实现的功能为wal2sql、fosync、pgto、waldump。

supa_audit

此类插件类似于将表上所有的日志行为进行审计,追踪表上做了些什么操作,其他能达到类似审计效果的比如 pg_audit、pg_log_userqueries、pgreplay、audit-trigger-pg96 等,也是类似原理,记录在审计日志中。以其官方例子为例:

1 | -- Insert a record |

记录的行为十分详尽,不过我没有亲自尝试过,对于事务冲突的处理能力如何尚未验证,感兴趣的读者可以自行测验。

pg_auditor

该插件和 supa_audit 类似,它依赖于 hstore 插件,它还支持一个十分粗糙的 flashback

PostgreSQL auditing extension that records each data modification statement of specific tables, and allows partial or complete flashback of transactions.

The

hstoreextension is required in order to store table row versions.

pg_auditorprovides an interface to put specific tables under audit monitoring, by keeping log of every change of the data being made (by either INSERT, UPDATE or DELETE statements). When all the three data modification statements are recorded, it is possible to make a complete transaction flashback, thus restoring the data to a previous state.

如果记录了 INSERT,UPDATE,DELETE,那么其还可以模仿 flashback,通过调用 auditor.flashback + 指定事务号即可。

1 | postgres=# SELECT auditor.flashback(5556); |

增强版复制槽

我们知道,逻辑解码的时刻基于创建复制槽的那个一致性位点状态,假如现在有这样一个操作流:

- T1时刻:开启一个长事务,做了相关更改,但不提交

- T2时刻:开启逻辑解码

- T3时刻:T1事务提交

那么是否可以将 T1 ~ T3 这段时间的操作 decoding 出来?实际上这是一个伪命题,逻辑解码这个动作会被阻塞。因此,我们无法创建任意时间点 (指定 LSN) 的复制槽,但是 pg_tm_aux 插件可以,他就是为了填补这块的空缺。

1 | postgres=# \dx+ pg_tm_aux |

举个栗子

1 | postgres=# create table test_decoding(id int,info text); |

然后我基于 A/D7090FF8 创建一个复制槽,我就可以成功的将后面的两条数据给解码出来了。此处因为我没有配置身份标识,所以无法解析 UPDATE 和 DELETE。

1 | [postgres@mypg ~]$ pg_recvlogical -d postgres --slot myslot --start -o pretty-print=1 -o add-msg-prefixes=wal2json -f - |

不过关于逻辑解码的弊端我之前也聊过,对于 OLD KEYS,依赖于 replica identity,要解析 DELETE 的 OLD KEYS,最好需要搭配 replica full,如果表包括 TOAST,并且业务行为需要获取更改后的值 (NEW) 以及更改前的值 (OLD),那么将复制标识设为 FULL 可以使 PostgreSQL 也将额外的 TOAST 列解析出来,即使没有操作 TOAST 列。但是在 16 版本以前,如果是 FULL,很容易将订阅端拖垮,因此需要妥善权衡其中利弊。16 以后的话会利用 BTREE,订阅端的效率就可以大大提升。pg_tm_aux 的更多妙用各位读者就自行探索吧。

其他

一些其他工具我这里没有过多介绍,可以参照我之前写的文章,如何抢救被删的数据

比如 pg_fix,pg_resetwal,pg_waldump,pg_filedump 等等。

小结

关于闪回,其实很早之前 PostgreSQL 原生是支持的,不过后面移交给社区之后,就被移除了,也算是一大遗憾吧,毕竟闪回很多时候能救命。

参考

https://zhuanlan.zhihu.com/p/109132267